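Summary: through experiments, this article explores the relationship among BDP, TCP buffers, and RT (round-trip time), discusses how throughput can be controlled, and examines how curl's rate limiting works.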

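Following plantegg's article "TCP 性能和发送接收窗口、Buffer 的关系" (how TCP performance relates to the send/receive windows and buffers), I adjust RT and pin the kernel TCP buffers to fixed sizes so that the transfer slows down as RT increases. The procedure is roughly:

1. Create a fairly large file and serve it with a Python HTTP server.
2. Tune the TCP settings.
3. Download the file with curl from another machine.
4. Capture packets with tcpdump and analyze the capture in Wireshark.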

Environment setup

On my MacBook (M1), I created the VMs with Vagrant + VMware using this Vagrantfile:

```ruby
# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  (1..2).each do |i|
    # define a node
    config.vm.define "node#{i}" do |node|
      # box configuration
      node.vm.box = "bento/rockylinux-9"

      # set the VM's hostname
      node.vm.hostname = "node#{i}"

      # set the VM's IP
      # node.vm.network "private_network", ip: "192.168.60.#{10+i}"

      node.vm.synced_folder ".", "/vagrant", disabled: true

      # vagrant rsync-auto --debug
      # https://developer.hashicorp.com/vagrant/docs/synced-folders/rsync
      node.vm.synced_folder ".", "/vagrant", type: "rsync",
        rsync__exclude: [".git/", "docs/", ".vagrant/"],
        rsync__args: ["--verbose", "--rsync-path='sudo rsync'", "--archive", "-z"]

      node.vm.provider :vmware_desktop do |vmware|
        vmware.vmx["ethernet0.pcislotnumber"] = "160"
      end

      # shared folder between host and VM
      # VirtualBox-related settings
      node.vm.provision "shell", inline: <<-SHELL
        echo "hello"
        sed -e 's|^mirrorlist=|#mirrorlist=|g' \
            -e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.ustc.edu.cn/rocky|g' \
            -i.bak \
            /etc/yum.repos.d/rocky-extras.repo \
            /etc/yum.repos.d/rocky.repo
        yum install -y yum-utils
        yum-config-manager --add-repo https://mirrors.ustc.edu.cn/docker-ce/linux/centos/docker-ce.repo
        sed -i 's+https://download.docker.com+https://mirrors.ustc.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo
        dnf makecache
        # dnf install -y epel-release && dnf makecache
        yum install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin tmux nc nmap
        systemctl enable --now docker
        # https://github.com/iovisor/bcc/blob/master/INSTALL.md#rhel---binary
        # export PATH="/usr/share/bcc/tools:$PATH"
        yum install -y bcc-tools
        # https://tshark.dev/setup/install/
        yum install -y tcpdump net-tools gdb dstat zip wireshark-cli
      SHELL
    end # end config.vm.define node
  end # end each node
end
```

This creates two VMs, referred to below as VM1 and VM2:

```shell
export VM1=192.168.245.151
export VM2=192.168.245.152

# On VM1: create a 2 GB file test.txt (both ls and du report 2G) and serve it
fallocate -l 2G test.txt
python3 -m http.server 8089

# On VM2: download the file from VM1
curl -v --output /tmp/test.txt http://$VM1:8089/test.txt
```

The current sysctl settings are:

```
sysctl -a | egrep "rmem|wmem|tcp_mem|adv_win|moderate"
net.core.rmem_default = 212992
net.core.rmem_max = 212992
net.core.wmem_default = 212992
net.core.wmem_max = 212992
net.ipv4.tcp_adv_win_scale = 1
net.ipv4.tcp_mem = 18852 25136 37704
net.ipv4.tcp_moderate_rcvbuf = 1
net.ipv4.tcp_rmem = 4096 131072 6291456
net.ipv4.tcp_wmem = 4096 4096 4096
net.ipv4.udp_rmem_min = 4096
net.ipv4.udp_wmem_min = 4096
vm.lowmem_reserve_ratio = 256 256 32 0 0
```

The experiments below adjust the sizes of rmem and wmem.

First, an overview of the results:

| group | rmem (bytes) | window (bytes) | RTT (ms) | throughput (KB/s) | dup ACK (%) | retransmission (%) | out-of-order (%) | packet count |
|---|---|---|---|---|---|---|---|---|
| benchmark | 4096 | 1040 | 2 | 3,079 | 0 | 0 | 0 | 66086 |
| 10 ms | 4096 | 1040 | 10 | 108 | 0 | 0 | 0 | 2239 |
| 20 ms | 4096 | 1040 | 20 | 58 | 0 | 0 | 0 | 1263 |
| 40 ms | 4096 | 1040 | 40 | 30 | 0 | 0 | 0 | 644 |
| 80 ms | 4096 | 1040 | 80 | 15 | 0 | 0 | 0 | 348 |
| 100 ms | 4096 | 1040 | 100 | 12 | 0 | 0 | 0 | 283 |
| 1% loss | 4096 | 1040 | 2 | 1651 | 0.63 | 0.50 | 0.12 | 36264 |
| 10% loss | 4096 | 1040 | 2 | 202 | 250/5057 ≈ 4.94 | 97/5057 ≈ 1.92 | 152/5057 ≈ 3.01 | 5057 |
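The percentage columns in the loss rows are event counts divided by the total packet count. The counts are presumably the ones Wireshark reports for each expert-info category. The small helper below (`ratio_pct` is a name introduced here) recomputes the 10% loss row from the counts in the table:

```python
def ratio_pct(count: int, total: int) -> float:
    """Return count/total as a percentage rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 2)


# Counts from the "10% loss" row: 5057 packets in the capture.
TOTAL_PACKETS = 5057
counts = {"dup ack": 250, "retransmission": 97, "out-of-order": 152}

for name, count in counts.items():
    print(f"{name}: {count}/{TOTAL_PACKETS} = {ratio_pct(count, TOTAL_PACKETS)}%")
```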

Experiment 1: fix rmem and wmem, increase latency

```shell
sysctl -w "net.ipv4.tcp_rmem=4096 4096 4096"  # min default max
sysctl -w "net.ipv4.tcp_wmem=4096 4096 4096"
```

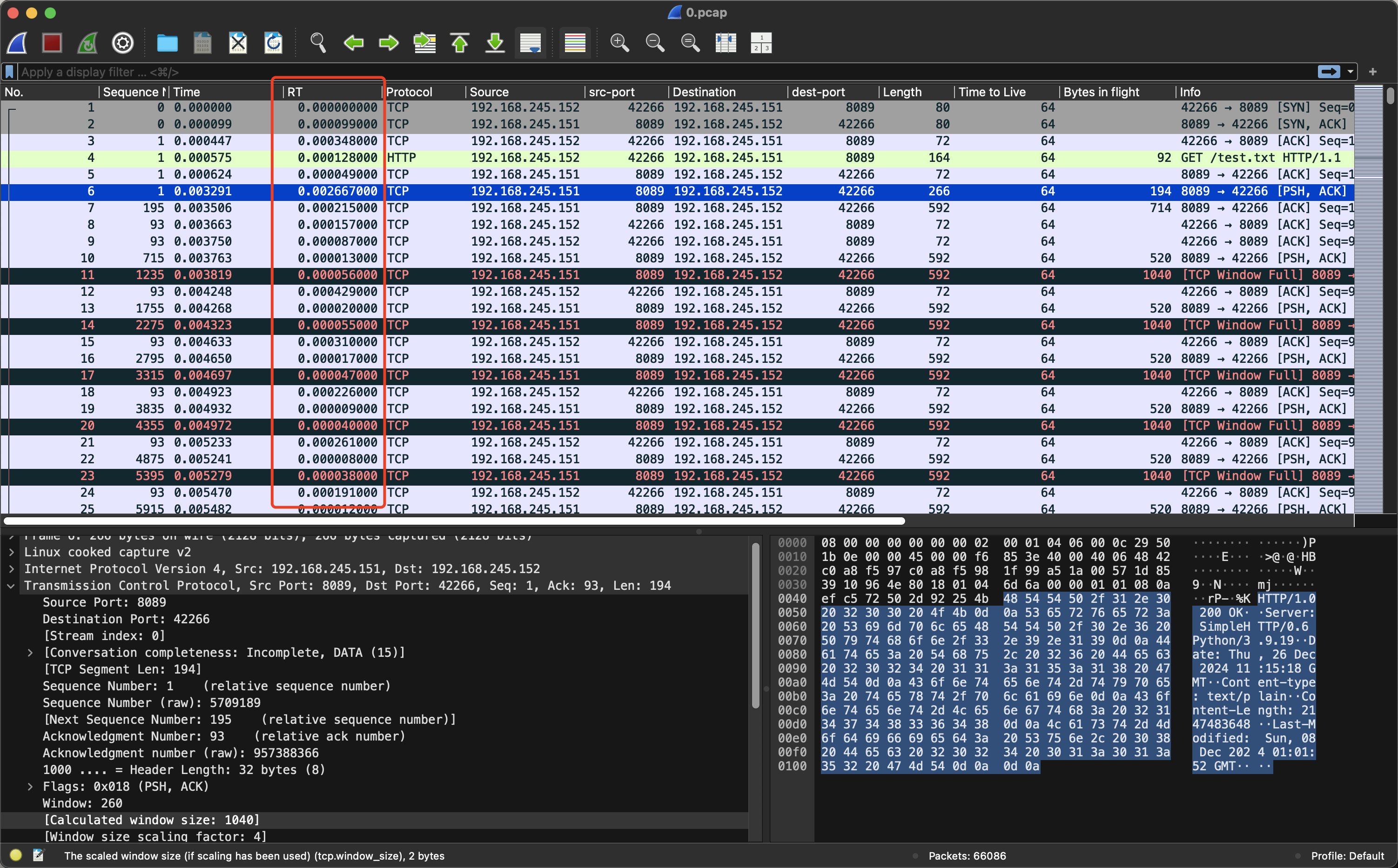

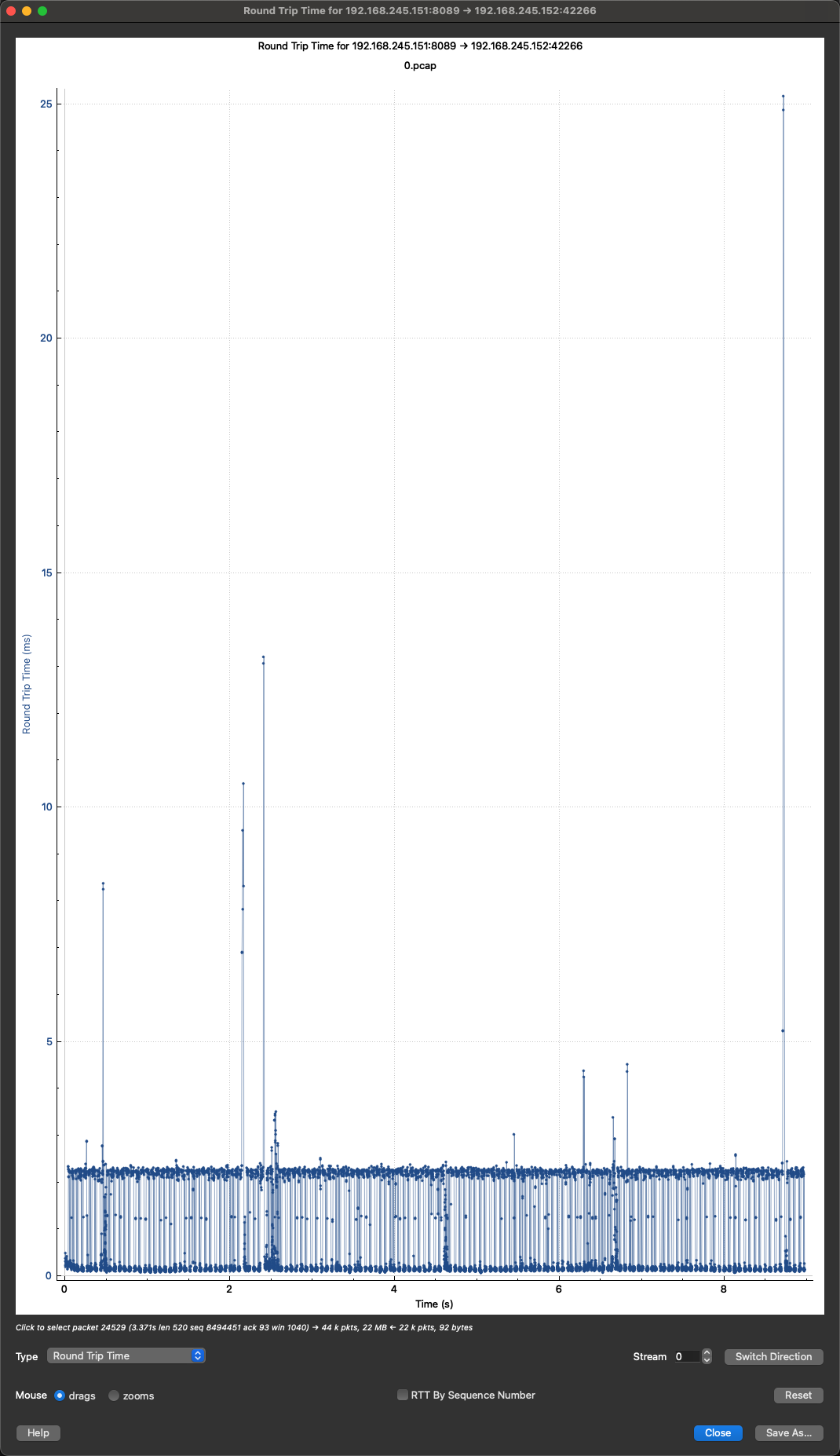

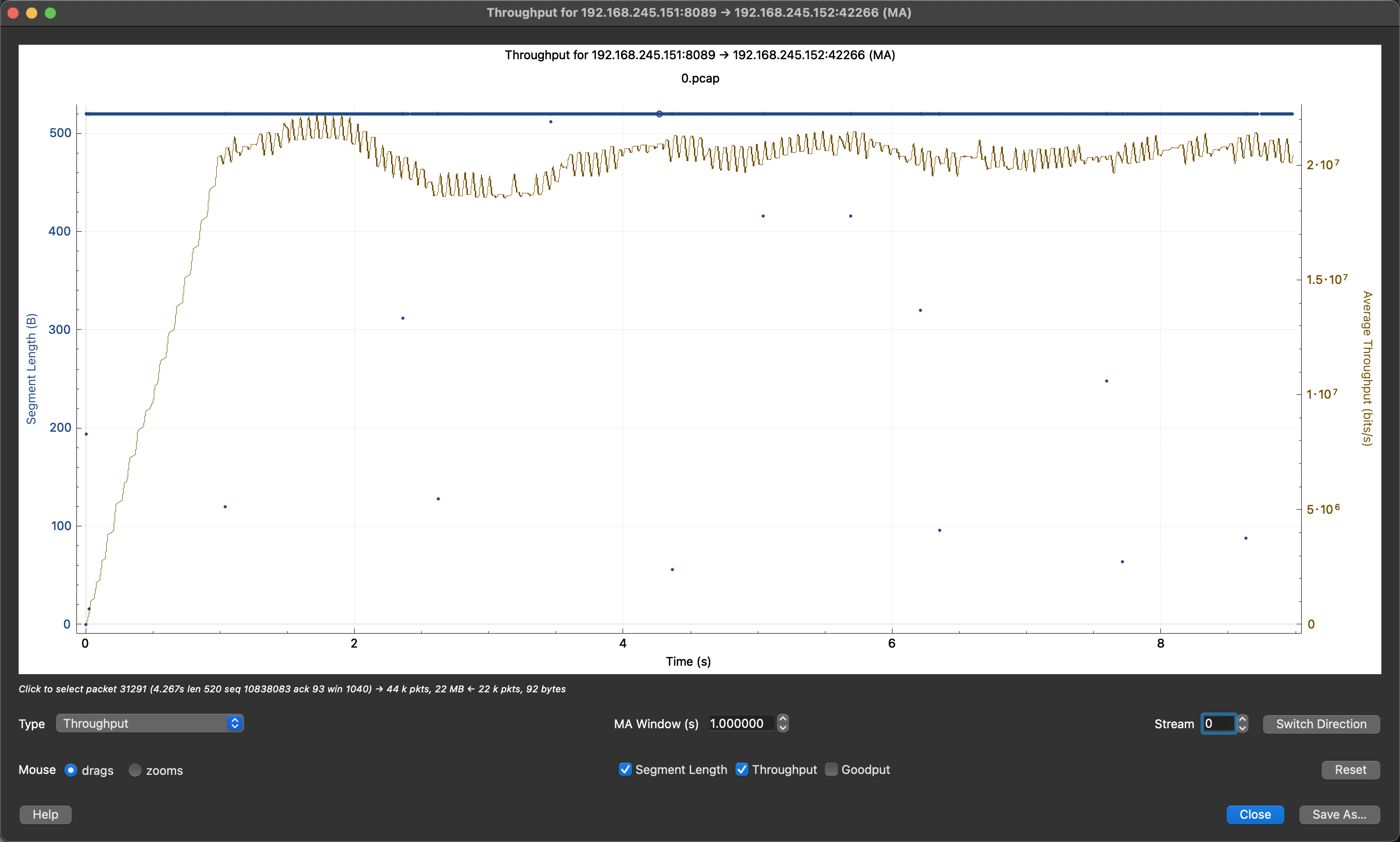

With rmem and wmem fixed at 4096 and no added delay:

Throughput: $2.1 \times 10^{7}$ bits/s = `python3 -c 'print(2.1*10**7/8/1024)'` = 2563.48 KB/s

TODO: this value differs from the 3,079 KB/s shown in Wireshark's Capture File Properties. Needs a closer look.

RT is very small:

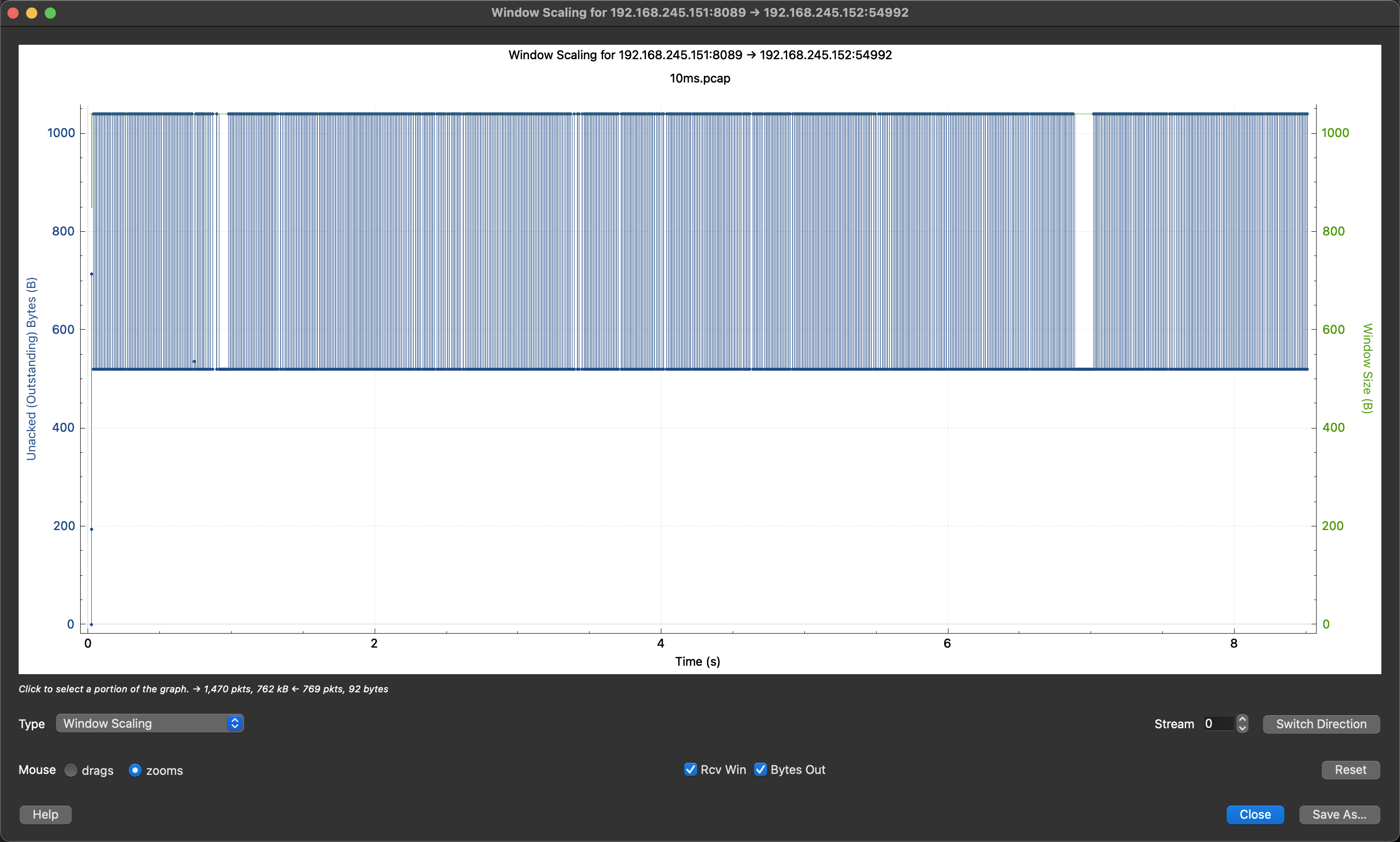

Now add 10 ms of delay:

```shell
tc qdisc add dev eth0 root netem delay 10ms
```
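The later runs step through 20, 40, 80 and 100 ms, so the netem qdisc has to be changed repeatedly. A second `tc qdisc add ... root` on the same device fails because a root qdisc already exists. `tc qdisc replace` creates the qdisc or updates the existing one.

The sketch below only builds the command lines, so you can print them and run them as root on the VM where the delay should apply. The helper names `netem_cmd` and `netem_clear_cmd` are hypothetical. netem's `loss` option is my assumption for the loss experiments, since the article does not show the command it used there.

```python
import shlex


def netem_cmd(dev: str, delay_ms: int = 0, loss_pct: float = 0) -> list[str]:
    """Build a `tc` command that installs or updates a root netem qdisc on `dev`.

    `replace` creates the qdisc when none exists and modifies it otherwise,
    so the same command works for every step (10 ms, 20 ms, ...).
    """
    cmd = ["tc", "qdisc", "replace", "dev", dev, "root", "netem"]
    if delay_ms:
        cmd += ["delay", f"{delay_ms}ms"]
    if loss_pct:
        cmd += ["loss", f"{loss_pct:g}%"]
    return cmd


def netem_clear_cmd(dev: str) -> list[str]:
    """Build the command that deletes the root qdisc, restoring the default."""
    return ["tc", "qdisc", "del", "dev", dev, "root"]


if __name__ == "__main__":
    for ms in (10, 20, 40, 80, 100):
        print(shlex.join(netem_cmd("eth0", delay_ms=ms)))
    print(shlex.join(netem_cmd("eth0", loss_pct=1)))
    print(shlex.join(netem_clear_cmd("eth0")))
```

Instead of printing, you can pass each list to `subprocess.run(cmd, check=True)` when running as root.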

```
[root@node2 vagrant]# ping $VM1
PING 192.168.245.151 (192.168.245.151) 56(84) bytes of data.
64 bytes from 192.168.245.151: icmp_seq=1 ttl=64 time=24.5 ms
64 bytes from 192.168.245.151: icmp_seq=2 ttl=64 time=11.9 ms
64 bytes from 192.168.245.151: icmp_seq=3 ttl=64 time=10.7 ms
64 bytes from 192.168.245.151: icmp_seq=4 ttl=64 time=11.7 ms
64 bytes from 192.168.245.151: icmp_seq=5 ttl=64 time=11.4 ms

curl -v --output test.txt http://$VM1:8089/test.txt
92104 bytes/s
```

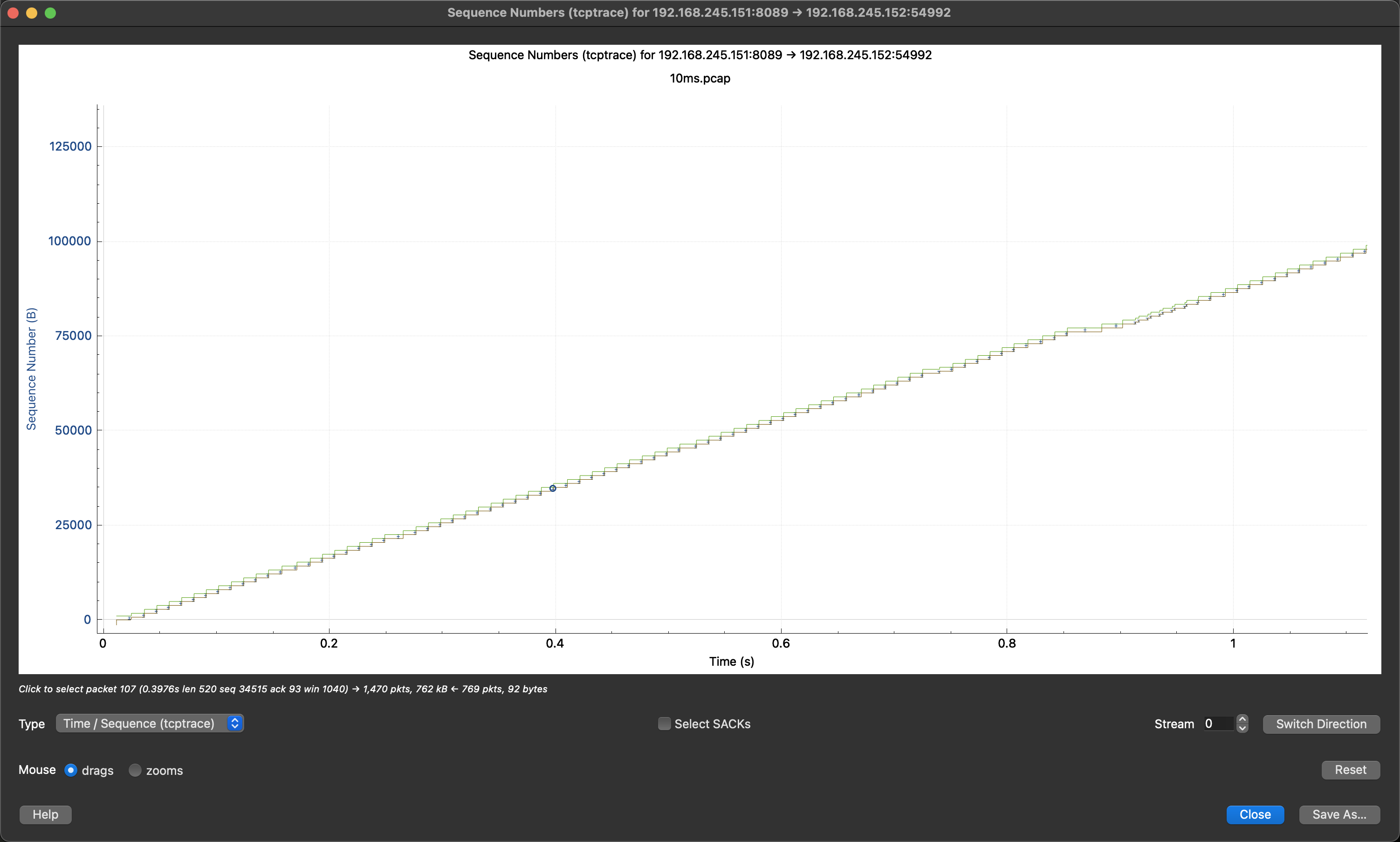

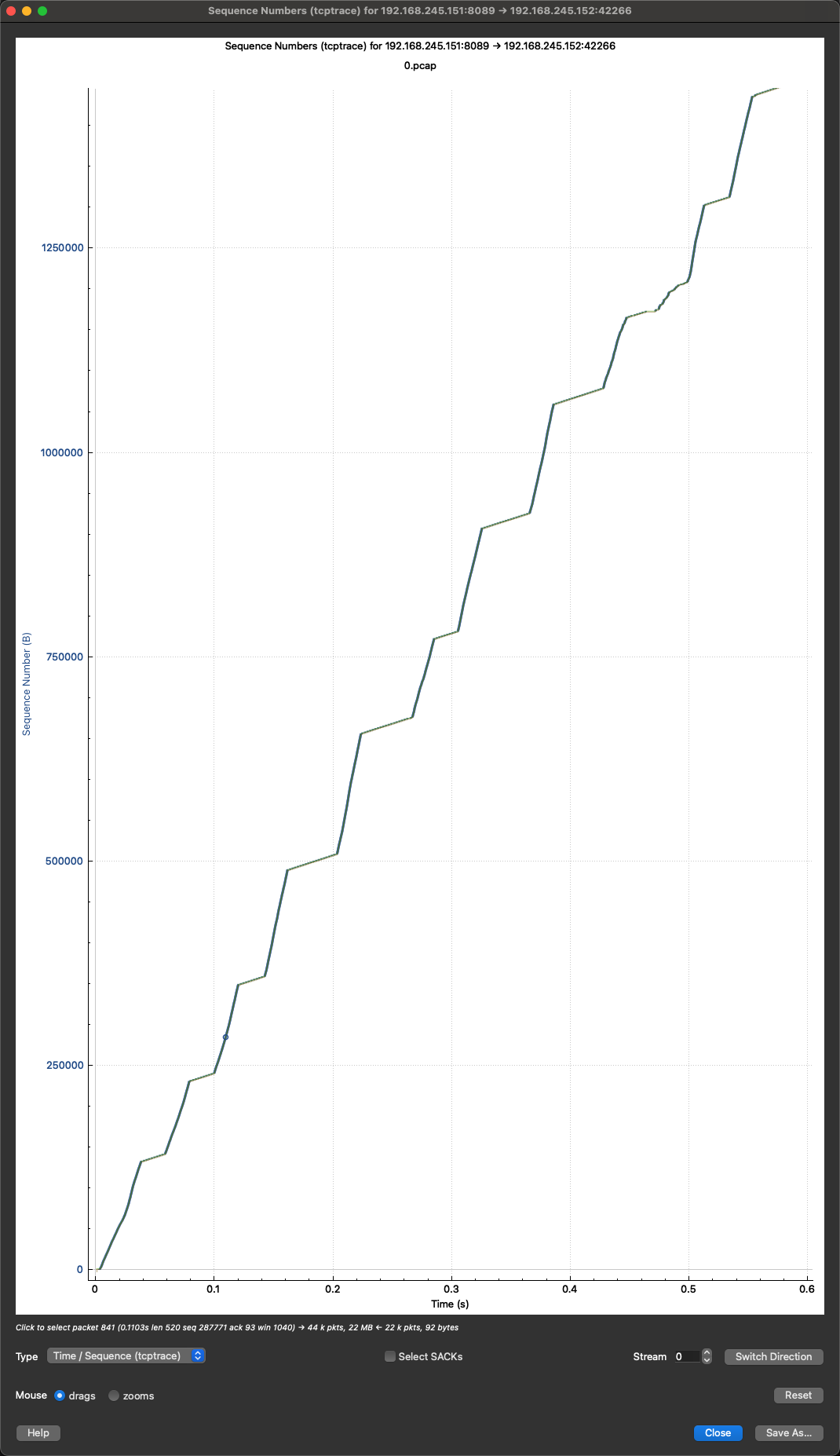

每个台阶的时间大概是 10ms,说明 10ms 生效了

可以看到吞吐不到 700000bits/s = python3 -c ‘print(700000/8/1024)’ = 85.45 KB/s

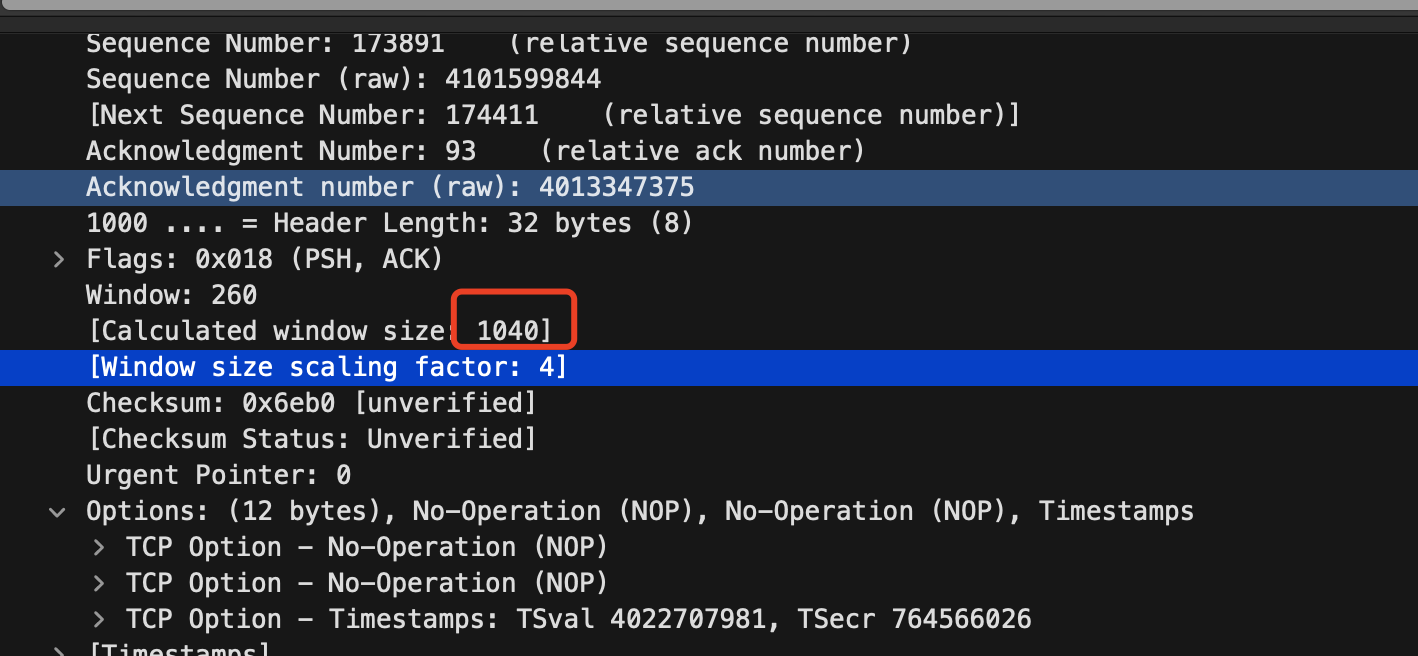

看报文中 Calculated window size: 1040
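The conversions above divide bits/s by 8 and then by 1024, so "KB" here means 1024 bytes. A tiny pair of helpers makes these conversions reusable. It also converts curl's own average for the 10 ms run (92104 bytes/s) so that it can be compared on the same scale. The function names are mine.

```python
def bits_to_kb_per_s(bits_per_s: float) -> float:
    """Convert bits/s to KB/s, where 1 KB = 1024 bytes (as in the article)."""
    return bits_per_s / 8 / 1024


def bytes_to_kb_per_s(bytes_per_s: float) -> float:
    """Convert bytes/s (curl's progress unit) to KB/s, 1 KB = 1024 bytes."""
    return bytes_per_s / 1024


print(f"{bits_to_kb_per_s(2.1e7):.2f}")    # no delay: 2563.48
print(f"{bits_to_kb_per_s(700_000):.2f}")  # 10 ms, Wireshark graph: 85.45
print(f"{bytes_to_kb_per_s(92_104):.2f}")  # 10 ms, curl's average: 89.95
```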

Summary

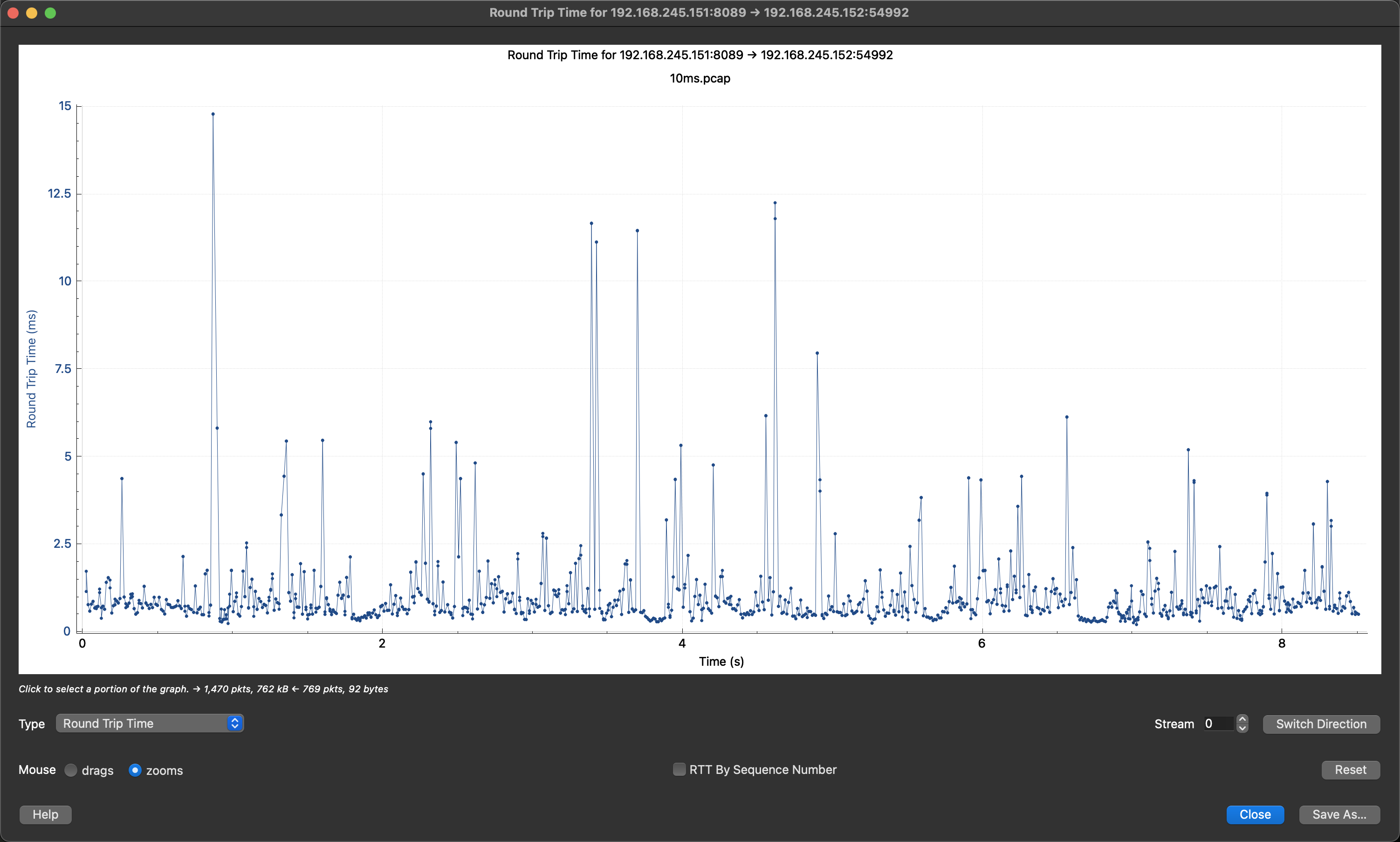

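With rmem and wmem fixed, throughput drops as latency increases.

I ran several experiments (10 ms, 20 ms, 30 ms, 40 ms), and the sending pattern is clear. Assume the amount sent per round trip and the delay are fixed (that is, ignore network jitter). Then exactly one window is delivered per RTT, so throughput = window / RTT. In Python:

```python
def throughput(window: int, rtt: int) -> float:
    """window in bytes, rtt in ms; returns throughput in KB/s"""
    return window * (1000 / rtt) / 1024
```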

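For example, with a 10 ms RTT and a 1040-byte window the throughput is:

```python
>>> print(throughput(1040, 10))
101.5625
```

The predicted 101 KB/s differs somewhat from the measured 85.45 KB/s because network transmission varies dynamically. Still, the formula is good enough to estimate throughput.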
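Because the formula is just window / RTT, it can be checked against every delay row of the results table. It can also be inverted to get the bandwidth-delay product (BDP): the number of bytes that must be in flight, and therefore fit in the buffers, to sustain a target rate.

In the sketch below, `window_for` is a name introduced here, and the measured values are copied from the table. It shows that keeping the no-delay benchmark rate at a 100 ms RTT would need roughly 300 KB in flight, far more than a 4096-byte buffer allows.

```python
def throughput(window: int, rtt: float) -> float:
    """KB/s when exactly one `window` of bytes (1 KB = 1024) arrives per `rtt` ms."""
    return window * (1000 / rtt) / 1024


def window_for(throughput_kb_s: float, rtt: float) -> float:
    """Bytes in flight needed to sustain `throughput_kb_s` at `rtt` ms (the BDP)."""
    return throughput_kb_s * 1024 * rtt / 1000


# (RTT in ms, measured KB/s) copied from the delay rows of the results table.
measured = [(10, 108), (20, 58), (40, 30), (80, 15), (100, 12)]

for rtt, kb_s in measured:
    print(f"rtt={rtt:>3} ms  predicted={throughput(1040, rtt):6.1f} KB/s  "
          f"measured={kb_s:>4} KB/s")

# Window needed to keep the no-delay benchmark rate (3,079 KB/s) at 100 ms.
print(f"{window_for(3079, 100):.0f} bytes")
```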

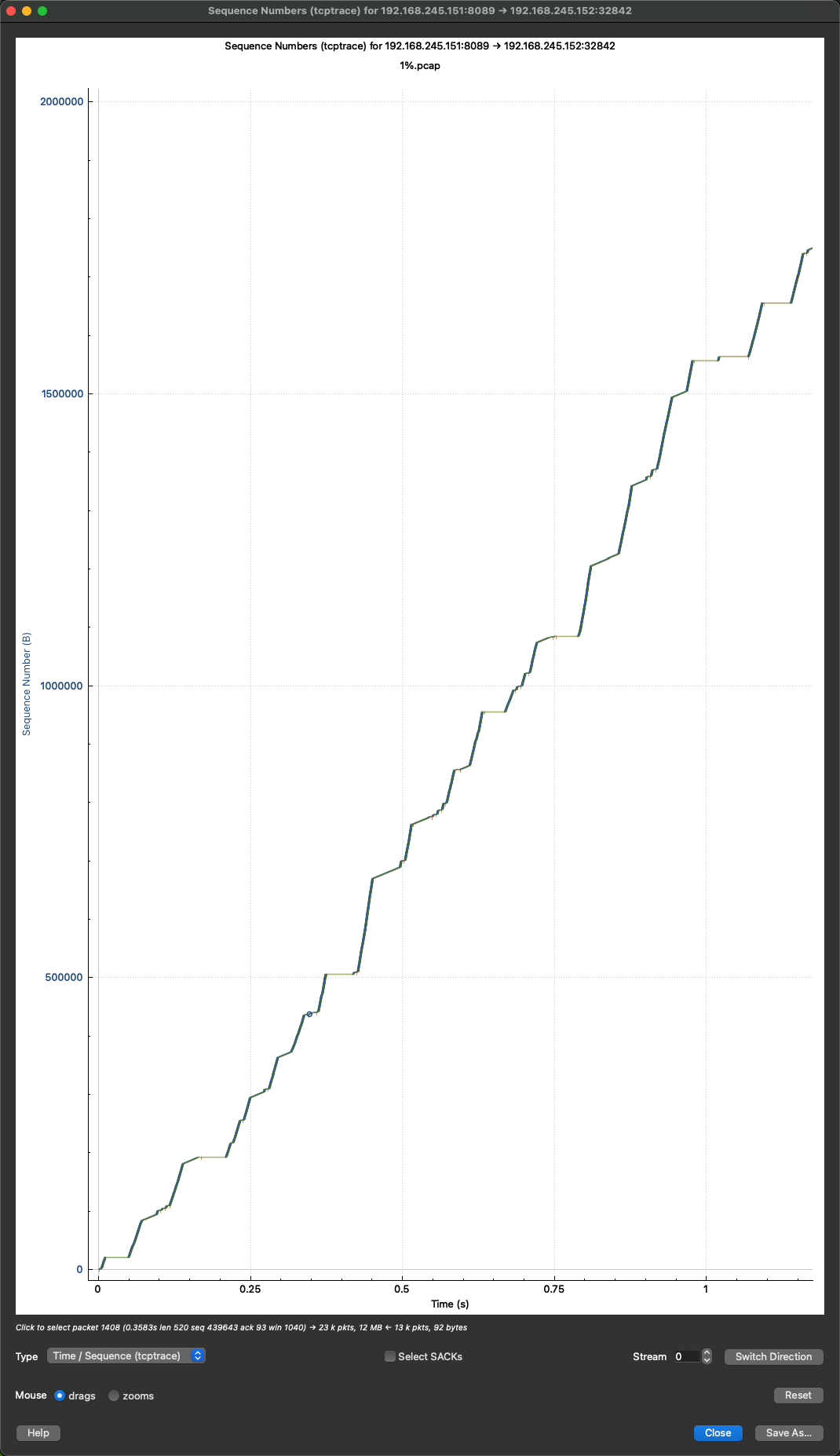

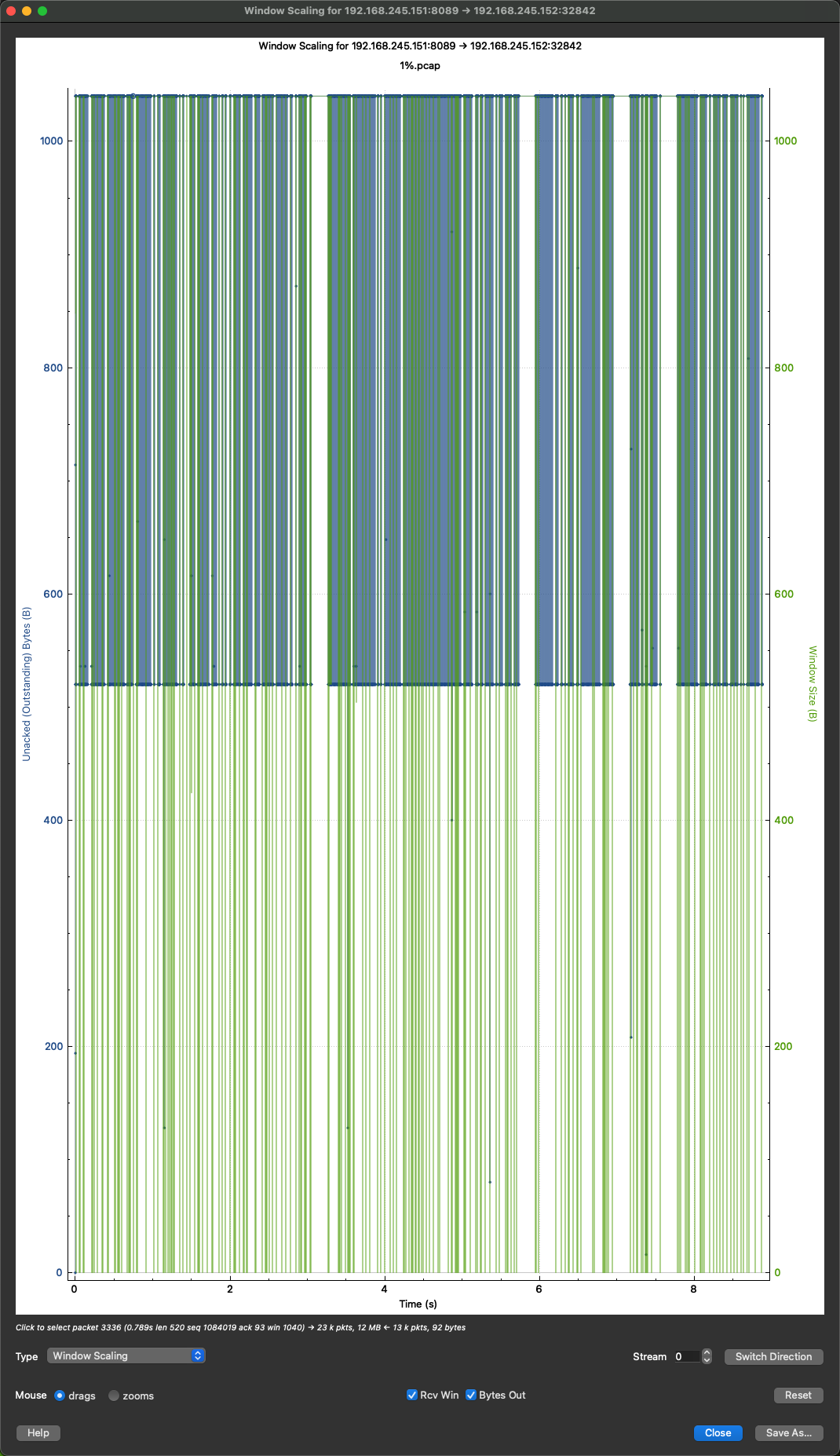

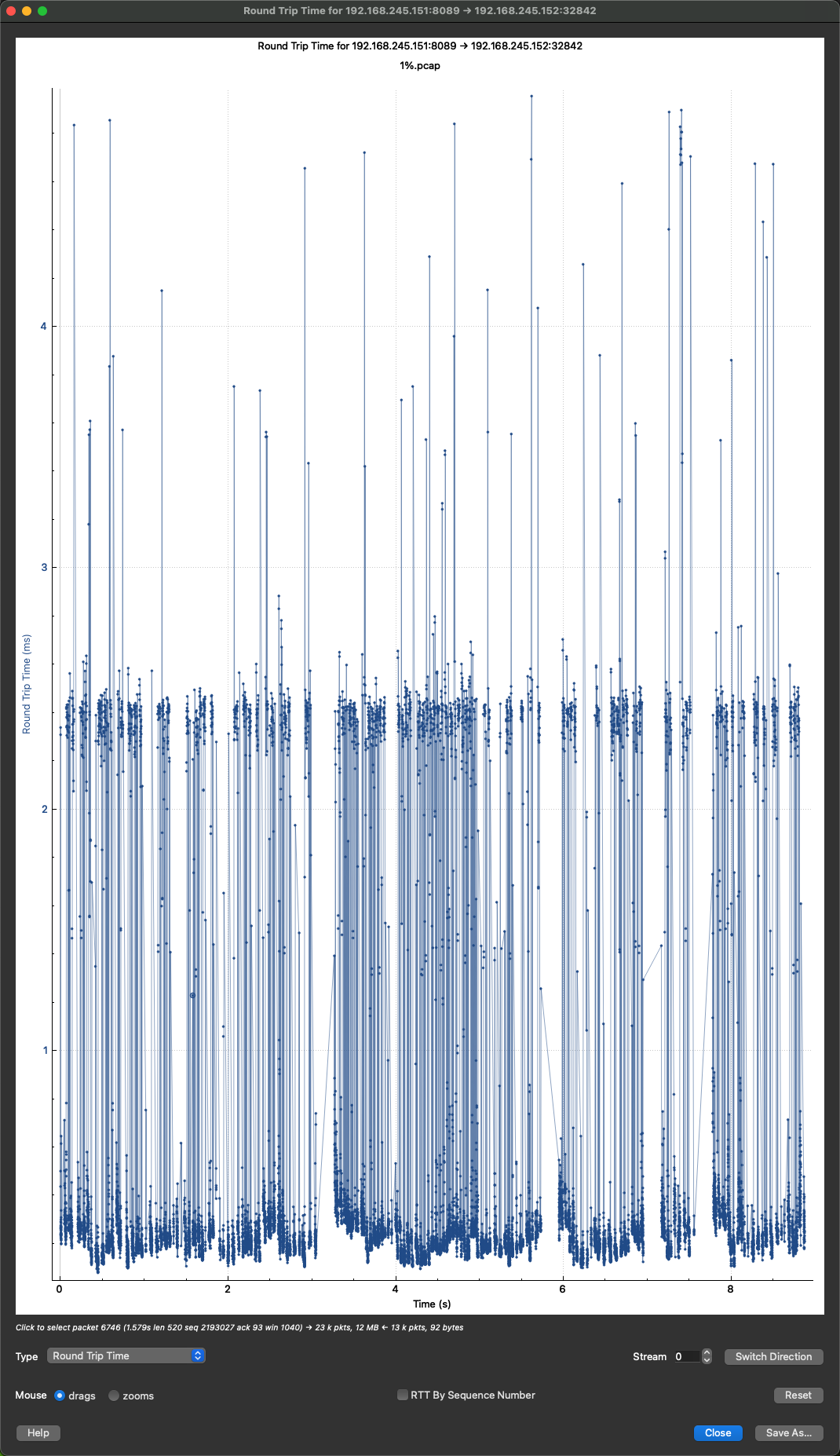

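Experiment 2: fix rmem and wmem, increase packet loss

The seq (sequence number) curve is very jagged, so sending is unstable.

Packet loss makes throughput unstable.

Summary

Packet loss causes retransmissions, which are slow, so throughput fluctuates considerably. As the loss rate rises, the fluctuation grows further and throughput decreases.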
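A toy expected-value model gives a rough idea of why even a small loss rate costs so much. It is not fitted to the measurements and will not reproduce the table's numbers. Every parameter is an assumption:

- a 1448-byte MSS;
- independent per-segment loss;
- a fixed 200 ms recovery penalty per affected window, standing in for Linux's minimum retransmission timeout.

The function name is mine.

```python
import math


def lossy_throughput(window: int, rtt_ms: float, loss: float,
                     mss: int = 1448, penalty_ms: float = 200.0) -> float:
    """Toy model: expected KB/s when a window may be hit by loss.

    The window is sent as ceil(window / mss) segments, each lost independently
    with probability `loss`. A clean window takes one RTT; a window with at
    least one loss costs an extra `penalty_ms` (a stand-in for an RTO).
    """
    segments = math.ceil(window / mss)
    p_hit = 1 - (1 - loss) ** segments      # P(at least one segment lost)
    expected_ms = rtt_ms + p_hit * penalty_ms
    return window * (1000 / expected_ms) / 1024


for loss in (0.0, 0.01, 0.10):
    print(f"loss={loss:.0%}  ~{lossy_throughput(1040, 2, loss):.1f} KB/s")
```

The point is the shape rather than the values: the penalty is about 100 times the RTT, so each lost segment costs far more time than it takes to deliver a clean window.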

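Experiment 3: set rmem and wmem separately

TODO: capture packets to verify.

Limiting the client's rmem produces a large number of TCP Window Full events, whereas limiting the server's wmem has little effect. My explanation is the following:

- **Client rmem.** After the client application reads data out of rmem, it takes one RTT before the server learns it may send again. The rmem limit therefore hurts throughput more.
- **Server wmem.** When the server's wmem is used up, the server only has to wait for the data to be sent and then allocate memory again. That delay is on the order of memory latency, so the impact on throughput is small.

The figure below shows more directly why rmem/wmem affect throughput.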
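Besides the sysctl knobs, an application can shrink its own receive buffer per socket with `SO_RCVBUF`. The Go client later in this article does this via `SetReadBuffer`.

On Linux, the size a socket actually gets differs from the value requested:

- the kernel doubles the value to leave room for bookkeeping overhead;
- it enforces a small minimum;
- it caps the request at `net.core.rmem_max`.

Setting `SO_RCVBUF` also locks the buffer size, which turns off receive-buffer auto-tuning (`tcp_moderate_rcvbuf`) for that socket.

The probe below assumes a Linux host. Other operating systems may report the value unchanged. The function name is mine.

```python
import socket


def granted_rcvbuf(requested: int) -> int:
    """Request SO_RCVBUF on a fresh TCP socket and return what the kernel grants."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, requested)
        return s.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)


for size in (1024, 4096, 65536):
    print(size, "->", granted_rcvbuf(size))
```

Set the option before `connect()`, because the window scale is negotiated during the handshake.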

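Experiment 4: curl rate limiting

Based on curl 8.12.1.

The analysis covers four aspects: core data structures, key functions, algorithm logic, and call flow.

Data structures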

```c
struct pgrs_measure {
  struct curltime start; /* when measure started */
  curl_off_t start_size; /* the 'cur_size' the measure started at */
};

struct pgrs_dir {
  curl_off_t total_size; /* total expected bytes */
  curl_off_t cur_size; /* transferred bytes so far */
  curl_off_t speed; /* bytes per second transferred */
  struct pgrs_measure limit;
};
```

Key code

Curl_pgrsStartNow initializes the rate-limiting state:

```c
void Curl_pgrsStartNow(struct Curl_easy *data)
{
  data->progress.speeder_c = 0; /* reset the progress meter display */
  data->progress.start = Curl_now();
  data->progress.is_t_startransfer_set = FALSE;
  data->progress.ul.limit.start = data->progress.start;
  data->progress.dl.limit.start = data->progress.start;
  data->progress.ul.limit.start_size = 0;
  data->progress.dl.limit.start_size = 0;
  data->progress.dl.cur_size = 0;
  data->progress.ul.cur_size = 0;
  /* clear all bits except HIDE and HEADERS_OUT */
  data->progress.flags &= PGRS_HIDE|PGRS_HEADERS_OUT;
  Curl_ratelimit(data, data->progress.start);
}
```

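Curl_pgrsLimitWaitTime calculates how many milliseconds to wait to get back under the speed limit. Curl_ratelimit periodically moves the starting point that this calculation uses.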

```c
/*
 * This is used to handle speed limits, calculating how many milliseconds to
 * wait until we are back under the speed limit, if needed.
 *
 * The way it works is by having a "starting point" (time & amount of data
 * transferred by then) used in the speed computation, to be used instead of
 * the start of the transfer. This starting point is regularly moved as
 * transfer goes on, to keep getting accurate values (instead of average over
 * the entire transfer).
 *
 * This function takes the current amount of data transferred, the amount at
 * the starting point, the limit (in bytes/s), the time of the starting point
 * and the current time.
 *
 * Returns 0 if no waiting is needed or when no waiting is needed but the
 * starting point should be reset (to current); or the number of milliseconds
 * to wait to get back under the speed limit.
 */
timediff_t Curl_pgrsLimitWaitTime(struct pgrs_dir *d,
                                  curl_off_t speed_limit,
                                  struct curltime now)
{
  curl_off_t size = d->cur_size - d->limit.start_size;
  timediff_t minimum;
  timediff_t actual;

  if(!speed_limit || !size)
    return 0;

  /*
   * 'minimum' is the number of milliseconds 'size' should take to download to
   * stay below 'limit'.
   */
  if(size < CURL_OFF_T_MAX/1000)
    minimum = (timediff_t) (CURL_OFF_T_C(1000) * size / speed_limit);
  else {
    minimum = (timediff_t) (size / speed_limit);
    if(minimum < TIMEDIFF_T_MAX/1000)
      minimum *= 1000;
    else
      minimum = TIMEDIFF_T_MAX;
  }

  /*
   * 'actual' is the time in milliseconds it took to actually download the
   * last 'size' bytes.
   */
  actual = Curl_timediff_ceil(now, d->limit.start);
  if(actual < minimum) {
    /* if it downloaded the data faster than the limit, make it wait the
       difference */
    return minimum - actual;
  }

  return 0;
}

/*
 * Update the timestamp and sizestamp to use for rate limit calculations.
 */
void Curl_ratelimit(struct Curl_easy *data, struct curltime now)
{
  /* do not set a new stamp unless the time since last update is long enough */
  if(data->set.max_recv_speed) {
    if(Curl_timediff(now, data->progress.dl.limit.start) >=
       MIN_RATE_LIMIT_PERIOD) {
      data->progress.dl.limit.start = now;
      data->progress.dl.limit.start_size = data->progress.dl.cur_size;
    }
  }
  if(data->set.max_send_speed) {
    if(Curl_timediff(now, data->progress.ul.limit.start) >=
       MIN_RATE_LIMIT_PERIOD) {
      data->progress.ul.limit.start = now;
      data->progress.ul.limit.start_size = data->progress.ul.cur_size;
    }
  }
}
```
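To make the arithmetic concrete, here is a direct Python port of the two functions for a single direction. The names `PgrsDir`, `limit_wait_time` and `ratelimit` are mine. Time is passed in milliseconds, and Python's unbounded integers make the overflow branch unnecessary. `MIN_RATE_LIMIT_PERIOD` is defined elsewhere in curl and is not shown above, so the 3000 ms used here is an assumption.

For example, 20000 bytes received within 1000 ms under a 10000 bytes/s limit should have taken 2000 ms, so the transfer must pause for another 1000 ms.

```python
import math
from dataclasses import dataclass

# Assumption: placeholder value for curl's MIN_RATE_LIMIT_PERIOD (ms).
MIN_RATE_LIMIT_PERIOD_MS = 3000


@dataclass
class PgrsDir:
    """The fields of struct pgrs_dir that the rate limiter uses."""
    cur_size: int = 0          # bytes transferred so far
    limit_start_ms: int = 0    # limit.start: time of the starting point
    limit_start_size: int = 0  # limit.start_size: cur_size at that time


def limit_wait_time(d: PgrsDir, speed_limit: int, now_ms: int) -> int:
    """Port of Curl_pgrsLimitWaitTime (overflow branch dropped)."""
    size = d.cur_size - d.limit_start_size
    if not speed_limit or not size:
        return 0
    minimum = 1000 * size // speed_limit           # ms `size` should take
    actual = math.ceil(now_ms - d.limit_start_ms)  # ms it actually took
    return minimum - actual if actual < minimum else 0


def ratelimit(d: PgrsDir, now_ms: int) -> None:
    """Port of Curl_ratelimit for one direction: slide the starting point."""
    if now_ms - d.limit_start_ms >= MIN_RATE_LIMIT_PERIOD_MS:
        d.limit_start_ms = now_ms
        d.limit_start_size = d.cur_size


d = PgrsDir(cur_size=20_000)
print(limit_wait_time(d, 10_000, now_ms=1000))  # 1000
```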

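state_performing does the actual socket reads and writes. When the speed limit is exceeded, it switches to the rate-limiting state (MSTATE_RATELIMITING):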

```c
static CURLMcode state_performing(struct Curl_easy *data,
                                  struct curltime *nowp,
                                  bool *stream_errorp,
                                  CURLcode *resultp)
{
  char *newurl = NULL;
  bool retry = FALSE;
  timediff_t recv_timeout_ms = 0;
  timediff_t send_timeout_ms = 0;
  CURLMcode rc = CURLM_OK;
  CURLcode result = *resultp = CURLE_OK;
  *stream_errorp = FALSE;

  /* check if over send speed */
  if(data->set.max_send_speed)
    send_timeout_ms = Curl_pgrsLimitWaitTime(&data->progress.ul,
                                             data->set.max_send_speed,
                                             *nowp);

  /* check if over recv speed */
  if(data->set.max_recv_speed)
    recv_timeout_ms = Curl_pgrsLimitWaitTime(&data->progress.dl,
                                             data->set.max_recv_speed,
                                             *nowp);

  if(send_timeout_ms || recv_timeout_ms) {
    Curl_ratelimit(data, *nowp);
    multistate(data, MSTATE_RATELIMITING);
    if(send_timeout_ms >= recv_timeout_ms)
      Curl_expire(data, send_timeout_ms, EXPIRE_TOOFAST);
    else
      Curl_expire(data, recv_timeout_ms, EXPIRE_TOOFAST);
    return CURLM_OK;
  }

  /* read/write data if it is ready to do so */
  result = Curl_sendrecv(data, nowp);

  if(data->req.done || (result == CURLE_RECV_ERROR)) {
    /* If CURLE_RECV_ERROR happens early enough, we assume it was a race
     * condition and the server closed the reused connection exactly when we
     * wanted to use it, so figure out if that is indeed the case.
     */
    CURLcode ret = Curl_retry_request(data, &newurl);
    if(!ret)
      retry = !!newurl;
    else if(!result)
      result = ret;

    if(retry) {
      /* if we are to retry, set the result to OK and consider the
         request as done */
      result = CURLE_OK;
      data->req.done = TRUE;
    }
  }
  else if((CURLE_HTTP2_STREAM == result) &&
          Curl_h2_http_1_1_error(data)) {
    CURLcode ret = Curl_retry_request(data, &newurl);

    if(!ret) {
      infof(data, "Downgrades to HTTP/1.1");
      streamclose(data->conn, "Disconnect HTTP/2 for HTTP/1");
      data->state.httpwant = CURL_HTTP_VERSION_1_1;
      /* clear the error message bit too as we ignore the one we got */
      data->state.errorbuf = FALSE;
      if(!newurl)
        /* typically for HTTP_1_1_REQUIRED error on first flight */
        newurl = strdup(data->state.url);
      /* if we are to retry, set the result to OK and consider the request
         as done */
      retry = TRUE;
      result = CURLE_OK;
      data->req.done = TRUE;
    }
    else
      result = ret;
  }

  if(result) {
    /*
     * The transfer phase returned error, we mark the connection to get closed
     * to prevent being reused. This is because we cannot possibly know if the
     * connection is in a good shape or not now. Unless it is a protocol which
     * uses two "channels" like FTP, as then the error happened in the data
     * connection.
     */

    if(!(data->conn->handler->flags & PROTOPT_DUAL) &&
       result != CURLE_HTTP2_STREAM)
      streamclose(data->conn, "Transfer returned error");

    multi_posttransfer(data);
    multi_done(data, result, TRUE);
  }
  else if(data->req.done && !Curl_cwriter_is_paused(data)) {
    const struct Curl_handler *handler = data->conn->handler;

    /* call this even if the readwrite function returned error */
    multi_posttransfer(data);

    /* When we follow redirects or is set to retry the connection, we must to
       go back to the CONNECT state */
    if(data->req.newurl || retry) {
      followtype follow = FOLLOW_NONE;
      if(!retry) {
        /* if the URL is a follow-location and not just a retried request then
           figure out the URL here */
        free(newurl);
        newurl = data->req.newurl;
        data->req.newurl = NULL;
        follow = FOLLOW_REDIR;
      }
      else
        follow = FOLLOW_RETRY;
      (void)multi_done(data, CURLE_OK, FALSE);
      /* multi_done() might return CURLE_GOT_NOTHING */
      result = multi_follow(data, handler, newurl, follow);
      if(!result) {
        multistate(data, MSTATE_SETUP);
        rc = CURLM_CALL_MULTI_PERFORM;
      }
    }
    else {
      /* after the transfer is done, go DONE */

      /* but first check to see if we got a location info even though we are
         not following redirects */
      if(data->req.location) {
        free(newurl);
        newurl = data->req.location;
        data->req.location = NULL;
        result = multi_follow(data, handler, newurl, FOLLOW_FAKE);
        if(result) {
          *stream_errorp = TRUE;
          result = multi_done(data, result, TRUE);
        }
      }

      if(!result) {
        multistate(data, MSTATE_DONE);
        rc = CURLM_CALL_MULTI_PERFORM;
      }
    }
  }
  else if(data->state.select_bits && !Curl_xfer_is_blocked(data)) {
    /* This avoids CURLM_CALL_MULTI_PERFORM so that a very fast transfer does
       not get stuck on this transfer at the expense of other concurrent
       transfers */
    Curl_expire(data, 0, EXPIRE_RUN_NOW);
  }
  free(newurl);
  *resultp = result;
  return rc;
}
```

state_ratelimiting handles the rate-limited state and resumes the transfer once both directions are back under their limits:

```c
static CURLMcode state_ratelimiting(struct Curl_easy *data,
                                    struct curltime *nowp,
                                    CURLcode *resultp)
{
  CURLcode result = CURLE_OK;
  CURLMcode rc = CURLM_OK;
  DEBUGASSERT(data->conn);
  /* if both rates are within spec, resume transfer */
  if(Curl_pgrsUpdate(data))
    result = CURLE_ABORTED_BY_CALLBACK;
  else
    result = Curl_speedcheck(data, *nowp);

  if(result) {
    if(!(data->conn->handler->flags & PROTOPT_DUAL) &&
       result != CURLE_HTTP2_STREAM)
      streamclose(data->conn, "Transfer returned error");

    multi_posttransfer(data);
    multi_done(data, result, TRUE);
  }
  else {
    timediff_t recv_timeout_ms = 0;
    timediff_t send_timeout_ms = 0;
    if(data->set.max_send_speed)
      send_timeout_ms =
        Curl_pgrsLimitWaitTime(&data->progress.ul,
                               data->set.max_send_speed,
                               *nowp);

    if(data->set.max_recv_speed)
      recv_timeout_ms =
        Curl_pgrsLimitWaitTime(&data->progress.dl,
                               data->set.max_recv_speed,
                               *nowp);

    if(!send_timeout_ms && !recv_timeout_ms) {
      multistate(data, MSTATE_PERFORMING);
      Curl_ratelimit(data, *nowp);
      /* start performing again right away */
      rc = CURLM_CALL_MULTI_PERFORM;
    }
    else if(send_timeout_ms >= recv_timeout_ms)
      Curl_expire(data, send_timeout_ms, EXPIRE_TOOFAST);
    else
      Curl_expire(data, recv_timeout_ms, EXPIRE_TOOFAST);
  }
  *resultp = result;
  return rc;
}
```
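Leaving out error handling and the Curl_pgrsUpdate/Curl_speedcheck calls, both states make the same decision:

- If neither direction has to wait, the transfer keeps (or resumes) performing.
- Otherwise it rate-limits and arms a timer with the larger of the two waits.

The only asymmetry is where Curl_ratelimit is called. state_performing calls it when entering RATELIMITING, and state_ratelimiting calls it when leaving. A sketch of just that decision follows; the function name and state strings are illustrative.

```python
from typing import Optional, Tuple

PERFORMING = "MSTATE_PERFORMING"
RATELIMITING = "MSTATE_RATELIMITING"


def next_state(send_wait_ms: int, recv_wait_ms: int) -> Tuple[str, Optional[int]]:
    """Return (next state, expire timeout in ms or None)."""
    if not send_wait_ms and not recv_wait_ms:
        # Both directions are under their limits: (keep) performing.
        return PERFORMING, None
    # `if(send >= recv) expire(send) else expire(recv)` is the larger wait.
    return RATELIMITING, max(send_wait_ms, recv_wait_ms)


print(next_state(0, 0))      # ('MSTATE_PERFORMING', None)
print(next_state(0, 250))    # ('MSTATE_RATELIMITING', 250)
print(next_state(400, 250))  # ('MSTATE_RATELIMITING', 400)
```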

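Algorithm logic

curl implements rate limiting with a sliding window:

- Curl_pgrsStartNow initializes the window's starting point.
- Curl_pgrsLimitWaitTime computes how long to wait to get back under the limit.
- Curl_ratelimit moves the starting point forward at most once every MIN_RATE_LIMIT_PERIOD.

During the transfer (state_performing), curl checks whether the limit is exceeded. If it is, curl waits for the time returned by Curl_pgrsLimitWaitTime (state_ratelimiting) and then resumes reading and writing the socket.

Rough pseudocode: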

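```
limit = "10kb/s"
Curl_pgrsStartNow(...)              // record the starting point (time, bytes)
for {
    if limitWait := Curl_pgrsLimitWaitTime(...); limitWait > 0 {
        Curl_ratelimit(...)         // move the starting point if it is old enough
        sleep(limitWait)            // state_ratelimiting: wait out the excess
        continue
    }
    state_performing(...)           // read/write the socket
}
```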
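The pseudocode can be turned into a runnable simulation. This sketch makes the following assumptions:

- The class and function names are mine.
- `MIN_RATE_LIMIT_PERIOD_MS = 3000` is an assumed value for curl's constant.
- The 16 KB read size is arbitrary.
- A simulated clock replaces real sleeping, and the network is infinitely fast, so the only thing that makes time pass is the limiter.

```python
import math

# Assumed value of curl's MIN_RATE_LIMIT_PERIOD (ms).
MIN_RATE_LIMIT_PERIOD_MS = 3000


class SlidingLimiter:
    """Starting-point ("sliding window") limiter modelled on curl's logic."""

    def __init__(self, limit: int, period_ms: int = MIN_RATE_LIMIT_PERIOD_MS):
        if limit <= 0:
            raise ValueError("limit must be positive")
        self.limit = limit          # bytes per second
        self.period_ms = period_ms
        self.cur_size = 0           # bytes transferred so far
        self.start_ms = 0           # starting point: time ...
        self.start_size = 0         # ... and bytes transferred by then

    def wait_ms(self, now_ms: int) -> int:
        """Like Curl_pgrsLimitWaitTime: pause length, 0 if none is needed."""
        size = self.cur_size - self.start_size
        if not size:
            return 0
        minimum = 1000 * size // self.limit
        actual = math.ceil(now_ms - self.start_ms)
        return minimum - actual if actual < minimum else 0

    def maybe_slide(self, now_ms: int) -> None:
        """Like Curl_ratelimit: move the starting point once it is old enough."""
        if now_ms - self.start_ms >= self.period_ms:
            self.start_ms, self.start_size = now_ms, self.cur_size


def simulate(limit: int, chunk: int, total: int) -> tuple[int, list[tuple[int, int]]]:
    """Download `total` bytes over an infinitely fast link, `chunk` bytes per read.

    Returns (elapsed_ms, reads) where reads holds (time_ms, bytes) pairs.
    """
    lim = SlidingLimiter(limit)
    now, limited, reads = 0, False, []
    while lim.cur_size < total:
        wait = lim.wait_ms(now)
        if wait:                     # PERFORMING -> RATELIMITING
            lim.maybe_slide(now)
            now += wait
            limited = True
            continue
        if limited:                  # RATELIMITING -> PERFORMING
            lim.maybe_slide(now)
            limited = False
        n = min(chunk, total - lim.cur_size)
        lim.cur_size += n
        reads.append((now, n))
    return now, reads


if __name__ == "__main__":
    total = 5 * 1024 * 1024
    elapsed, reads = simulate(200 * 1024, chunk=16 * 1024, total=total)
    avg = total / elapsed * 1000 / 1024
    print(f"elapsed {elapsed} ms, {len(reads)} reads, average {avg:.1f} KB/s")
    # elapsed 25520 ms, 320 reads, average 200.6 KB/s
```

Every read lands as an instantaneous burst followed by an 80 ms pause (16 KB at 200 KB/s). The average therefore settles at the limit, while the instantaneous rate is unbounded. The small excess over 200 KB/s comes from the last read, which is not followed by a pause.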

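A global search of the curl source for SO_RCVBUF finds nothing. This suggests that curl does not implement rate limiting by setting the send/receive buffer (window) sizes.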

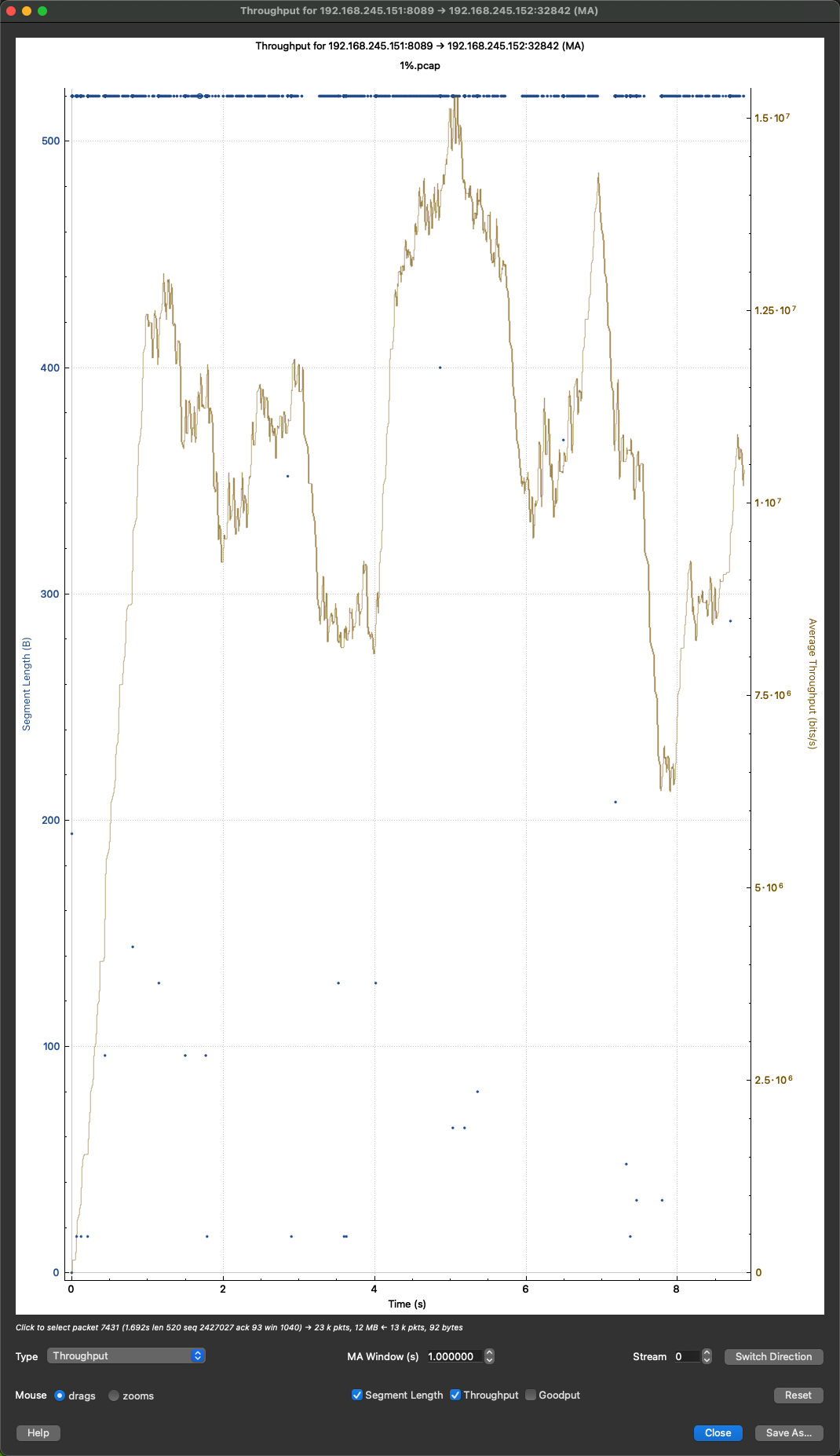

Packet capture

Run the following command and capture packets to see how the rate limiting actually behaves on the wire:

```shell
curl http://mirrors.tuna.tsinghua.edu.cn/ubuntu-releases/24.04.2/ubuntu-24.04.2-live-server-amd64.iso -k -v --output t.iso --limit-rate 200K
```

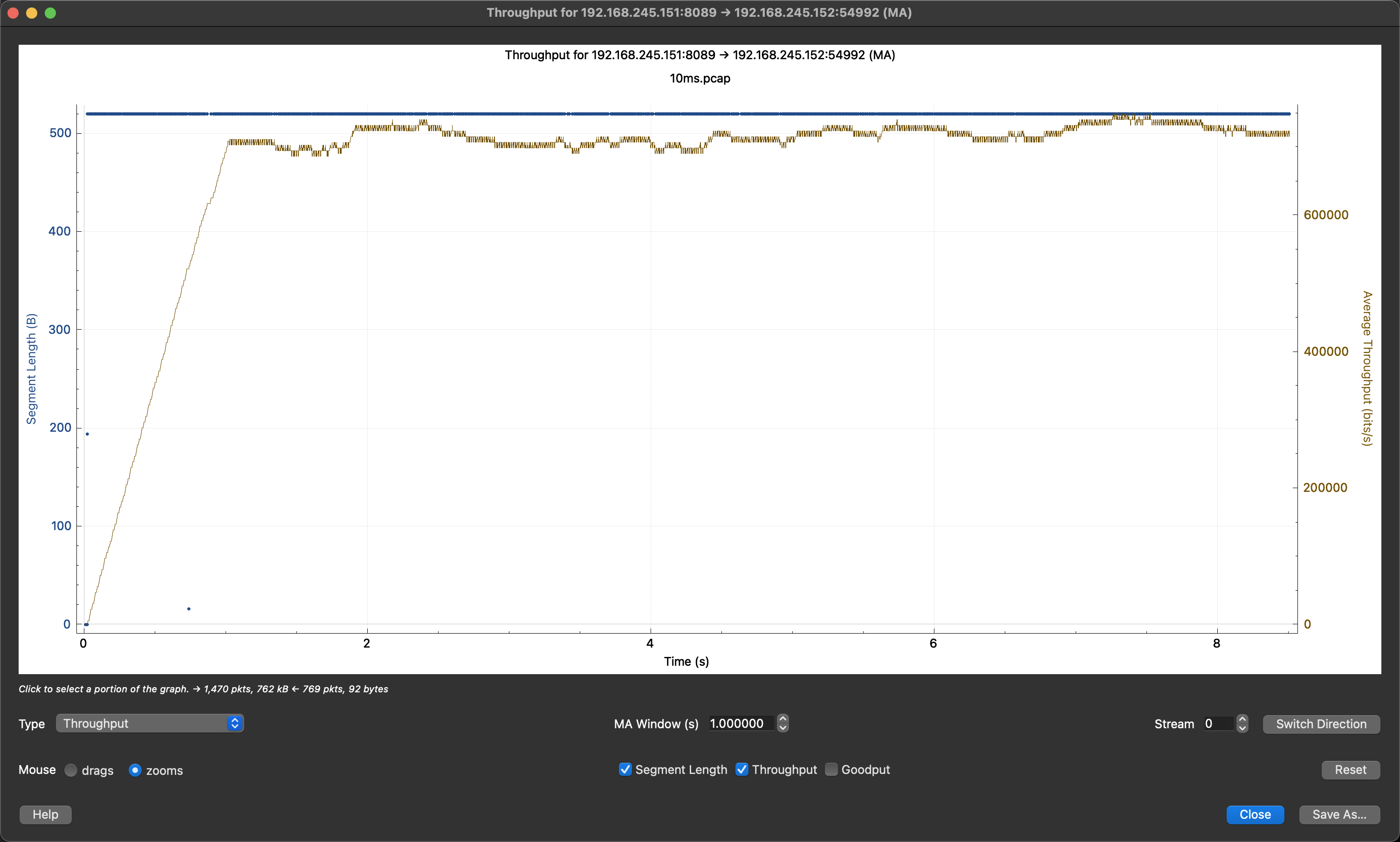

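At the very start the instantaneous throughput is very high (4638 KB/s). Several later peaks are also high, from 1220 KB/s to 2441 KB/s, while the overall average is 233 KB/s. These high instantaneous peaks further confirm that curl does not control the rate by setting SO_RCVBUF/SO_SNDBUF to limit the send/receive window.

Call flow

Implementing a similar rate limiter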

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"io"
	"net"
	"net/http"
	"os"
	"time"
)

const (
	BufferSize = 1024
)

// GOARCH=arm64 GOOS=linux go build -o golimitclient labs/network/BDP-buffer-RT/golimitclient/main.go
func main() {
	tcpdialer := &net.Dialer{
		Timeout:   30 * time.Second,
		KeepAlive: 30 * time.Second,
	}
	transport := &http.Transport{
		Proxy: http.ProxyFromEnvironment,
		DialContext: func(ctx context.Context, network, addr string) (net.Conn, error) {
			conn, err := tcpdialer.DialContext(ctx, network, addr)
			if err != nil {
				return nil, err
			}
			// Shrink the kernel receive buffer (SO_RCVBUF) of the connection.
			if tcpConn, ok := conn.(*net.TCPConn); ok {
				if err := tcpConn.SetReadBuffer(BufferSize); err != nil {
					conn.Close()
					return nil, err
				}
			}
			return conn, nil
		},
		ForceAttemptHTTP2:     true,
		MaxIdleConns:          100,
		IdleConnTimeout:       90 * time.Second,
		TLSHandshakeTimeout:   10 * time.Second,
		ExpectContinueTimeout: 1 * time.Second,
		// ReadBufferSize: BufferSize,
	}
	client := &http.Client{Transport: transport}

	response, err := client.Get(fmt.Sprintf("http://%s:8089/test.txt", os.Getenv("VM1")))
	check(err)
	defer response.Body.Close()

	const readTimes = 2000
	const readTimesPerSecond = 20
	// Read at most BufferSize bytes per iteration, readTimesPerSecond times a second.
	for i := 0; i < readTimes; i++ {
		_, err := io.CopyN(io.Discard, response.Body, BufferSize)
		if errors.Is(err, io.EOF) {
			break // the body ended before readTimes iterations
		}
		check(err)
		println(i)
		time.Sleep(time.Second / readTimesPerSecond)
	}
}

func check(err error) {
	if err != nil {
		panic(err)
	}
}
```

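As the figure shows, the rate is held at roughly 20 KB/s.

In practice, setting the TCP read buffer (tcpConn.SetReadBuffer(BufferSize)) does have an effect. Even without it, though, the throughput eventually converges to the 20 KB/s limit.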

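Conclusion

- The larger the RT, the lower the speed.
- The higher the packet loss, the lower the speed.
- curl limits speed by dynamically capping how much data it reads within a period of time.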
